Topic: Models And Releases

Anthropic unveils ‘auditing agents’ to test for AI misalignment

Anthropic developed its auditing agents while testing Claude Opus 4 for alignment issues....

Key Takeaways:

- Anthropic's auditing agents demonstrate promise across multiple alignment auditing tasks and can significantly help scale human oversight over AI systems.

- The agents successfully uncovered hidden goals, built safety evaluations, and surfaced concerning behaviors in AI models.

- Further work on automating alignment auditing with AI agents is necessary to address the limitations of the current approach.

Figma’s AI app building tool is now available for everyone

Figma Make, the prompt-to-app coding tool that Figma introduced earlier this year, is now available for all users. Similar to AI coding tools like Goo...

Key Takeaways:

- Figma Make lets users build working prototypes and apps from natural language descriptions, without requiring coding skills.

- The tool supports design references and lets users adjust individual elements through AI prompts or by editing them manually.

- Figma is introducing a new AI credit system: lower-tier users get limited access to the AI tools, while Full Seat users get unlimited access.

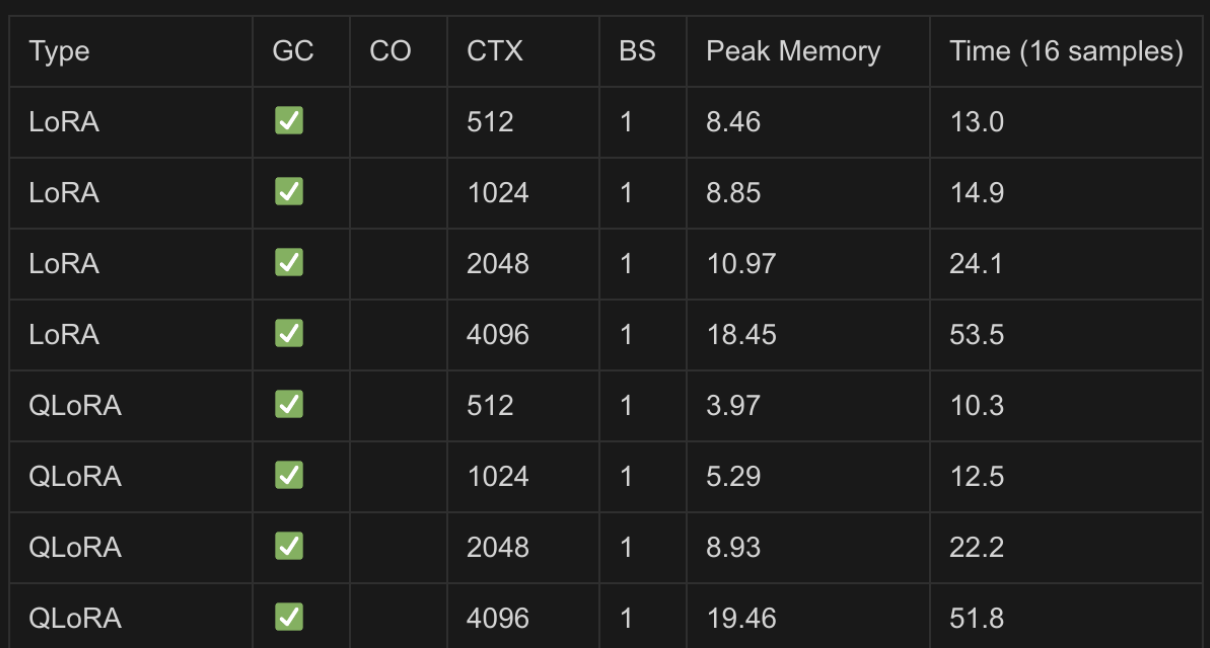

You can now train a 70B language model at home

Article URL: https://www.answer.ai/posts/2024-03-06-fsdp-qlora.html
Comments URL: https://news.ycombinator.com/item?id=44674830
Points: 29 # Comments:...

Key Takeaways:

- The system combines FSDP and QLoRA to efficiently train large models with consumer GPUs, reducing the cost and making AI more accessible to everyone.

- The project is part of Answer.AI's mission to provide a foundation for creating personalized models and making AI available to everyone, regardless of hardware constraints.

- The FSDP/QLoRA system has been successfully tested and can be used to train large language models, with plans for further improvements and community-based development.
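To see why combining the two techniques can make a 70B model fit on consumer hardware, here is back-of-the-envelope arithmetic for the frozen base weights only. It is a sketch under stated assumptions: 4 bits per quantized weight (QLoRA's NF4 format), a hypothetical budget of 24 GB per GPU (the memory of gaming cards such as the RTX 3090/4090, not a figure from the summary above), and idealized FSDP sharding in which each GPU stores an equal slice of the weights. It ignores LoRA adapters and their optimizer state, activations, quantization constants, and the layer FSDP temporarily gathers during compute, so real usage is higher. The helper names are illustrative.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Memory for the model weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


def per_gpu_weight_memory_gb(n_params: float, bits_per_param: float, n_gpus: int) -> float:
    """Idealized FSDP: each GPU keeps an equal shard of the (quantized) weights.

    Ignores the full layer that FSDP all-gathers temporarily during forward/backward.
    """
    return weight_memory_gb(n_params, bits_per_param) / n_gpus


N_PARAMS = 70e9        # 70B-parameter model
GPU_MEMORY_GB = 24.0   # assumed per-GPU memory budget (hypothetical)

if __name__ == "__main__":
    fp16 = weight_memory_gb(N_PARAMS, 16)                     # 16-bit weights
    nf4 = weight_memory_gb(N_PARAMS, 4)                       # 4-bit weights, one GPU
    shard = per_gpu_weight_memory_gb(N_PARAMS, 4, n_gpus=2)   # 4-bit weights, 2-way FSDP
    print(f"16-bit weights:          {fp16:.1f} GB")   # 140.0 GB
    print(f"4-bit weights:           {nf4:.1f} GB")    # 35.0 GB, still > 24 GB
    print(f"4-bit shard per GPU (2): {shard:.1f} GB")  # 17.5 GB, fits under 24 GB
    print(f"headroom per GPU:        {GPU_MEMORY_GB - shard:.1f} GB")  # 6.5 GB
```

Neither technique alone is enough in this estimate: quantization shrinks 140 GB to 35 GB, which still exceeds one 24 GB card, and sharding 16-bit weights over two cards would still need 70 GB each. Only the combination brings the per-GPU share under the budget, leaving the remaining headroom for adapters and activations.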

Resolving digital threats 100x faster with OpenAI

Discover how Outtake uses GPT-4.1 and OpenAI o3 to power AI agents that detect and resolve digital threats 100x faster than before....

Key Takeaways:

- Outtake uses GPT-4.1 and OpenAI o3 to power AI agents for digital threat detection.

- These agents detect and resolve digital threats 100x faster than before.

Building MCP servers for ChatGPT and API integrations

Article URL: https://platform.openai.com/docs/mcp
Comments URL: https://news.ycombinator.com/item?id=44676066
Points: 47 # Comments: 18...

Key Takeaways:

- The guide explains how to build MCP (Model Context Protocol) servers that expose tools and data to ChatGPT and to applications built on the OpenAI API.
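MCP (Model Context Protocol) servers talk to clients in JSON-RPC 2.0 messages; a server that offers tools answers `tools/list` requests (describe the available tools) and `tools/call` requests (run one tool with arguments). The toy, stdlib-only dispatcher below illustrates that request/response shape. It is not the code from OpenAI's guide or the official MCP SDK: it skips the `initialize` handshake and any transport (stdio or HTTP), and the `search`/`fetch` tools, the in-memory documents, and all helper names are hypothetical.

```python
import json
from typing import Any, Callable, Dict, List, Optional

# Hypothetical in-memory corpus standing in for a real data source.
DOCS: Dict[str, Dict[str, str]] = {
    "doc-1": {"title": "FSDP overview", "text": "Fully Sharded Data Parallel shards parameters across GPUs."},
    "doc-2": {"title": "QLoRA overview", "text": "QLoRA trains LoRA adapters on a 4-bit quantized base model."},
}

# Tool descriptions returned by tools/list; inputSchema is a JSON Schema for the arguments.
TOOLS: List[Dict[str, Any]] = [
    {"name": "search", "description": "Find documents containing a keyword.",
     "inputSchema": {"type": "object", "properties": {"query": {"type": "string"}}, "required": ["query"]}},
    {"name": "fetch", "description": "Return one document by id.",
     "inputSchema": {"type": "object", "properties": {"id": {"type": "string"}}, "required": ["id"]}},
]


def _search(args: Dict[str, Any]) -> List[Dict[str, str]]:
    query = args["query"].lower()
    return [{"id": doc_id, "title": doc["title"]} for doc_id, doc in DOCS.items()
            if query in (doc["title"] + " " + doc["text"]).lower()]


def _fetch(args: Dict[str, Any]) -> Dict[str, str]:
    doc_id = args["id"]
    return {"id": doc_id, **DOCS[doc_id]}  # raises KeyError for an unknown id


TOOL_HANDLERS: Dict[str, Callable[[Dict[str, Any]], Any]] = {"search": _search, "fetch": _fetch}


def _reply(req_id: Any, result: Optional[dict] = None, error: Optional[dict] = None) -> str:
    """Build a JSON-RPC 2.0 response carrying either a result or an error."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC request string and return the response string."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    if method == "tools/list":
        return _reply(req_id, result={"tools": TOOLS})
    if method == "tools/call":
        params = req.get("params", {})
        name = params.get("name")
        if name not in TOOL_HANDLERS:
            return _reply(req_id, error={"code": -32602, "message": f"Unknown tool: {name}"})
        try:
            data = TOOL_HANDLERS[name](params.get("arguments", {}))
        except KeyError as exc:
            # Tool-level failures go inside the result, flagged with isError.
            return _reply(req_id, result={"content": [{"type": "text", "text": f"missing or unknown key: {exc}"}],
                                          "isError": True})
        return _reply(req_id, result={"content": [{"type": "text", "text": json.dumps(data)}]})
    return _reply(req_id, error={"code": -32601, "message": f"Method not found: {method}"})
```

For example, `handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}')` returns the two tool descriptions, and a `tools/call` request for `search` with `{"query": "qlora"}` returns the matching document id as JSON text inside the result's `content` list.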

Qwen-MT: Where Speed Meets Smart Translation

Here we introduce the latest update of Qwen-MT (qwen-mt-turbo) via Qwen API. This update builds upon the powerful Qwen3,...

Key Takeaways:

- Qwen-MT provides high-quality translations across 92 major official languages and prominent dialects, covering over 95% of the global population.

- The new version adds advanced translation features such as terminology intervention, domain prompts, and translation memory, supports customizable prompts, and offers low latency and low cost.

- Qwen-MT achieves competitive translation quality while keeping a lightweight architecture, which enables fast, efficient translation.
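The terminology intervention, domain prompt, and translation memory features are request-time options rather than separate models. The sketch below only builds a request payload (no network call) to show how the three fit together. The field names (`translation_options`, `source_lang`, `target_lang`, `terms`, `tm_list`, `domains`) are assumptions modeled on Alibaba Cloud's OpenAI-compatible examples as we understand them, and the helper `build_qwen_mt_request` is hypothetical; check the current Qwen API documentation before relying on any of them.

```python
from typing import Any, Dict, List, Optional, Tuple


def build_qwen_mt_request(
    text: str,
    target_lang: str,
    source_lang: str = "auto",
    terms: Optional[Dict[str, str]] = None,
    tm_list: Optional[List[Tuple[str, str]]] = None,
    domains: Optional[str] = None,
    model: str = "qwen-mt-turbo",
) -> Dict[str, Any]:
    """Assemble a chat-style translation request (field names are assumptions)."""
    options: Dict[str, Any] = {"source_lang": source_lang, "target_lang": target_lang}
    if terms:
        # Terminology intervention: pin specific source terms to fixed translations.
        options["terms"] = [{"source": src, "target": tgt} for src, tgt in terms.items()]
    if tm_list:
        # Translation memory: previously approved sentence pairs used as references.
        options["tm_list"] = [{"source": src, "target": tgt} for src, tgt in tm_list]
    if domains:
        # Domain prompt: free-text description of the domain and desired style.
        options["domains"] = domains
    return {
        "model": model,
        "messages": [{"role": "user", "content": text}],
        "translation_options": options,
    }


if __name__ == "__main__":
    request = build_qwen_mt_request(
        "我们使用 FSDP 训练模型。",
        target_lang="English",
        terms={"训练": "train"},
        domains="Machine learning documentation; keep technical terms in English.",
    )
    print(request["translation_options"])
```

Options that are not supplied are simply left out, so a plain translation request stays minimal. With the OpenAI Python SDK, a non-standard field like `translation_options` would typically be passed through the `extra_body` argument of `chat.completions.create`.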

Anthropic’s New Research: Giving AI More "Thinking Time" Can Actually Make It Worse

GLM-4.5 Is About to Be Released

Qwen 3 Thinking is coming very soon

Higgs Audio V2: A New Open-Source TTS Model with Voice Cloning and SOTA Expressiveness

Qwen's third bomb: Qwen3-MT

Tested Kimi K2 vs Qwen-3 Coder on 15 Coding tasks - here's what I found

We just open sourced NeuralAgent: The AI Agent That Lives On Your Desktop and Uses It Like You Do!

OpenAI open source model likely coming July 31

Demolishing chats in Claude

Claude Opus 4 is writing better contracts than lawyers (and explaining them too). Here is the prompt you need to save thousands in legal fees

Is there a future for local models?

Tested Kimi K2 vs Qwen-3 Coder on 15 Coding tasks - Sonnet 4 is ahead but not far ahead

You can now create custom subagents for specialized tasks! Run /agents to get started

2 years later....."You're absolutely right!"

I tasked Generative AI with removing that stop sign and it gave me...