AI news for: LLM Inference

Explore AI news and updates on LLM inference from the last 7 days.

Ollama Web search

Article URL: https://ollama.com/blog/web-search | Comments URL: https://news.ycombinator.com/item?id=45377641 | Points: 32 | Comments: 6...

Ollama now provides a web search API that can be integrated with AI models to reduce hallucinations and improve accuracy. A free tier is available, with higher rate limits through Ollama's cloud.
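
A minimal Python sketch of calling the web search API over plain HTTP and turning the results into grounding context for a model. It assumes the REST endpoint `https://ollama.com/api/web_search`, Bearer-token auth read from an `OLLAMA_API_KEY` environment variable, an optional `max_results` field, and a response shaped like `{"results": [{"title", "url", "content"}]}`, as described in Ollama's announcement. Verify these against the current API docs. The helper names are hypothetical.

```python
import json
import os
import urllib.request

# Assumed endpoint, based on Ollama's web search announcement; check current docs.
WEB_SEARCH_URL = "https://ollama.com/api/web_search"


def build_search_request(query: str, api_key: str, max_results: int = 5) -> urllib.request.Request:
    """Build (but do not send) a POST request to the assumed web search endpoint."""
    body = json.dumps({"query": query, "max_results": max_results}).encode("utf-8")
    return urllib.request.Request(
        WEB_SEARCH_URL,
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def results_to_context(response: dict, max_chars: int = 500) -> str:
    """Format an assumed {"results": [...]} payload as a numbered context block
    that can be prepended to a model prompt. Each snippet is truncated to max_chars."""
    blocks = []
    for i, r in enumerate(response.get("results", []), start=1):
        snippet = r.get("content", "")[:max_chars]
        blocks.append(f"[{i}] {r.get('title', '')} ({r.get('url', '')})\n{snippet}")
    return "\n\n".join(blocks)


def search(query: str) -> dict:
    """Send the request. Needs network access and OLLAMA_API_KEY in the environment."""
    req = build_search_request(query, os.environ["OLLAMA_API_KEY"])
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)
```

Only `search` touches the network. The request builder and the formatter are pure functions, so the prompt-construction side can be tested offline.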

Key Takeaways:

- Provides a web search API to augment AI models with the latest information from the web.

- Works with models such as OpenAI's gpt-oss, enabling long-running research tasks.

- Recommended for building search agents, especially with models like qwen3 and gpt-oss, which have strong tool-use capabilities and support multi-turn interactions.
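
The multi-turn pattern behind such a search agent can be sketched as a loop. On each turn, the model either requests a tool call or returns a final answer, and every tool result is appended to the conversation before the next turn. The message and reply shapes below are simplified, hypothetical stand-ins rather than Ollama's exact chat schema, and `run_search_agent` is an illustrative name.

```python
from typing import Callable

# Hypothetical model interface: takes the message history and returns either
# {"tool_call": {"name": ..., "arguments": {...}}} or {"content": "final answer"}.
Model = Callable[[list[dict]], dict]


def run_search_agent(model: Model, tools: dict[str, Callable[..., str]],
                     question: str, max_turns: int = 5) -> str:
    """Alternate model turns and tool calls until the model answers."""
    messages: list[dict] = [{"role": "user", "content": question}]
    for _ in range(max_turns):
        reply = model(messages)
        call = reply.get("tool_call")
        if call is None:
            # No tool requested: treat the reply as the final answer.
            return reply["content"]
        messages.append({"role": "assistant", "tool_call": call})
        result = tools[call["name"]](**call["arguments"])
        # Feed the tool output back so the next model turn can use it.
        messages.append({"role": "tool", "name": call["name"], "content": result})
    raise RuntimeError("agent did not finish within max_turns")
```

In a real agent, `model` would wrap a chat call to a tool-capable model, and `tools` would map a name such as `"web_search"` to a function that queries the search API. `max_turns` bounds how long a research task can run.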

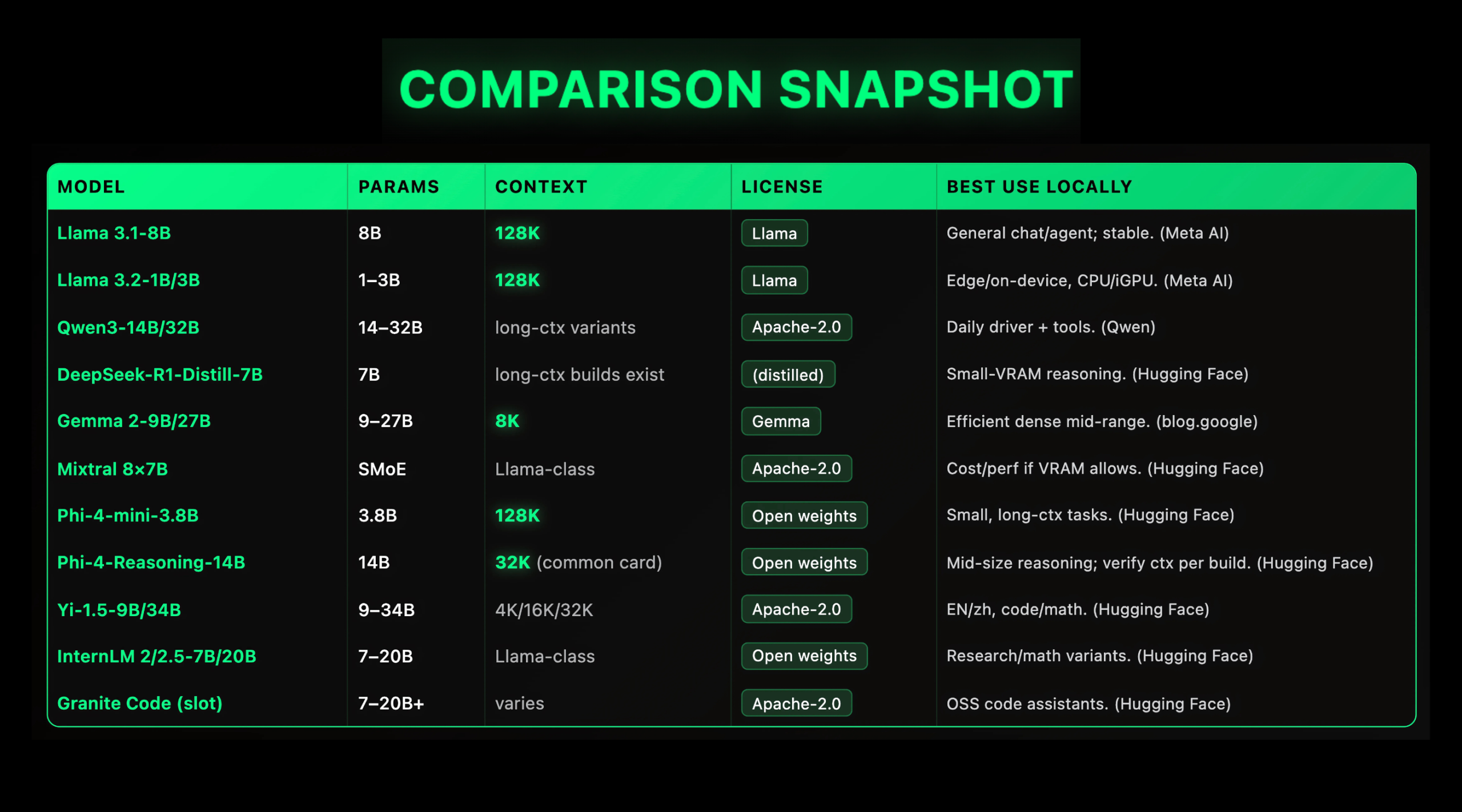

Top 10 Local LLMs (2025): Context Windows, VRAM Targets, and Licenses Compared

Local LLMs matured fast in 2025: open-weight families like Llama 3.1 (128K context length), Qwen3 (Apache-2.0, dense + MoE), Gemma 2 (9B/27B, 8K...

The latest list of top 10 local large language models (LLMs) for 2025 highlights Meta Llama 3.1-8B as the most deployable option due to its stable performance and first-class support across local toolchains.
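
To make "VRAM targets" concrete, here is a back-of-the-envelope estimate. Weight memory is roughly parameters × bits per weight ÷ 8. The KV cache grows linearly with context length as 2 × layers × KV heads × head dim × tokens × bytes per element. The Llama 3.1-8B values (32 layers, 8 KV heads, head dim 128) come from its published config. The Mixtral 8×7B figures (about 46.7B total and 12.9B active parameters) come from Mistral's announcement. Treat both as assumptions to double-check. The sketch ignores activations, runtime buffers, and the extra bits that real quantization formats spend on scales.

```python
GIB = 2**30


def weight_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GiB."""
    return params_billion * 1e9 * bits_per_weight / 8 / GIB


def kv_cache_gib(n_layers: int, n_kv_heads: int, head_dim: int,
                 context_len: int, bytes_per_elem: int = 2) -> float:
    """KV cache in GiB: one K and one V vector per layer, KV head, and token.
    bytes_per_elem=2 corresponds to an fp16/bf16 cache."""
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_elem / GIB


# Llama 3.1-8B (dense): ~8.0B params; 32 layers, 8 KV heads (GQA), head_dim 128.
llama_weights_q4 = weight_gib(8.0, 4)              # ~3.7 GiB at 4 bits/weight
llama_kv_8k = kv_cache_gib(32, 8, 128, 8_192)      # 1.0 GiB
llama_kv_128k = kv_cache_gib(32, 8, 128, 131_072)  # 16.0 GiB at the full 128K window

# Mixtral 8x7B (sparse MoE): unless experts are offloaded, all of them must be
# resident, so VRAM scales with total params (~46.7B). Per-token compute scales
# with the active params (~12.9B).
mixtral_weights_q4 = weight_gib(46.7, 4)  # ~21.7 GiB
mixtral_active_q4 = weight_gib(12.9, 4)   # ~6.0 GiB of weights used per token
```

At 4 bits, the 8B dense model fits comfortably on a consumer GPU. Filling its full 128K context, however, costs more memory for the fp16 KV cache than for the weights.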

Key Takeaways:

- For optimal performance, choose between dense models like Llama 3.1-8B and sparse MoE models like Mixtral 8×7B depending on available VRAM and parallelism.

- Licenses and ecosystems (for example, Qwen3's Apache-2.0 releases) set the operational guardrails for deploying local LLMs.

- Standardize on GGUF/llama.cpp for portability, and consider layering Ollama or LM Studio on top for convenience and hardware offload.
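
GGUF packs weights and metadata into a single file. A cheap sanity check before handing a download to llama.cpp or Ollama is to read its fixed header. This minimal sketch assumes the layout in the GGUF specification for versions 2 and 3: the 4-byte magic `GGUF`, a little-endian uint32 version, and then uint64 tensor and metadata key/value counts. The function names are hypothetical.

```python
import struct
from typing import NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


HEADER_SIZE = 24  # 4-byte magic + uint32 version + two uint64 counts


def parse_gguf_header(raw: bytes) -> GGUFHeader:
    """Parse the fixed-size GGUF header; assumes little-endian, version >= 2."""
    if len(raw) < HEADER_SIZE or raw[:4] != b"GGUF":
        raise ValueError("not a GGUF file")
    version, tensor_count, kv_count = struct.unpack_from("<IQQ", raw, 4)
    if version < 2:
        # Version 1 stored the counts as uint32; not handled in this sketch.
        raise ValueError(f"unsupported GGUF version {version}")
    return GGUFHeader(version, tensor_count, kv_count)


def read_gguf_header(path: str) -> GGUFHeader:
    """Read only the first HEADER_SIZE bytes of a (hypothetical) model file."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(HEADER_SIZE))
```

The metadata key/value pairs that follow this header describe the model, which is part of what lets different runtimes load the same file.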
